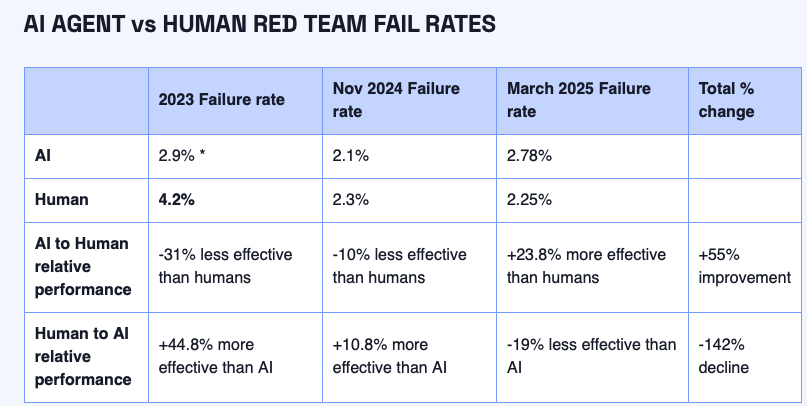

Hoxhunt published a report last month called AI-Powered Phishing Outperforms Elite Red Teams in 2025. It was released in full as a blog post, so read away. No download required, no registration, just click and you’re in. The core assertion in the report is that over the past two years, AI-generated phishing messages have become more effective at getting a user to click a phishing link than human-written phishing messages are. Here’s the chart:

Look at the “effectiveness rate” in the first two data rows. AI (AI-generated phishing messages) goes from 2.9% in March 2023 to 2.1% in November 2024 to 2.78% in March 2025, and human (phishing messages written by an elite red team) goes from 4.2% to 2.3% to 2.25% over the same three time periods. Data row three calculates the relative differences … from AI being 31% less effective, to 10% less effective, to 23.8% more effective … for a 55% improvement in relative effectiveness over the three time periods (strictly, a swing of about 55 percentage points, from -31% to +23.8%).
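To make the data row three arithmetic concrete, here is a minimal sketch that recomputes the relative differences from the rates quoted above. The formula used, (AI rate - human rate) / human rate, is our assumption about how the row was calculated; the report's own method and unrounded inputs may differ.

```python
# Effectiveness rates (%) as quoted in the text above.
periods = ["March 2023", "November 2024", "March 2025"]
ai_rates = [2.9, 2.1, 2.78]
human_rates = [4.2, 2.3, 2.25]


def relative_difference(ai: float, human: float) -> float:
    """Percent by which the AI rate differs from the human rate.

    Assumed formula (not confirmed by the report): (ai - human) / human.
    Negative means AI was less effective than the human red team.
    """
    return (ai - human) / human * 100


diffs = [relative_difference(a, h) for a, h in zip(ai_rates, human_rates)]

for period, diff in zip(periods, diffs):
    print(f"{period}: {diff:+.1f}%")

# Change between the first and last period, in percentage points.
swing = diffs[-1] - diffs[0]
print(f"Swing: {swing:.1f} percentage points")
```

Run against the rounded rates, this gives roughly -31.0%, -8.7% and +23.6%, for a swing of about 54.5 percentage points. The small gaps from the quoted 10% and 23.8% are most likely down to rounding in the published rates, though that is an assumption on our part.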

Hoxhunt says:

- The absolute failure rate metrics are less informative than the relative performance between the two.

- As its AI models improved, the attacks became more sophisticated and harder to detect.

- It’s only a matter of time until AI agents disrupt the phishing landscape, elevating the current effectiveness rate of AI-powered mass phishing to AI-powered spear phishing.

- Organizations should cease-and-desist on compliance-based security awareness training and embrace adaptive phishing training. Hoxhunt offers the latter.

We say:

- Neat research project. We love the emphasis on pushing the boundaries of how AI impacts phishing in a longitudinal study.

- The absolute failure rates above are actually interesting to us, in addition to the relative change. For human-written phishing messages, we read data row two as saying that people have become almost twice as good at detecting them from March 2023 to March 2025 (the failure rate almost halved, from 4.2% to 2.25%). Given the data is drawn exclusively from people trained by the Hoxhunt security awareness training platform, that’s interesting.

- For the trend line in the AI phishing data row, the rate dropped significantly and then jumped again, to almost but not quite as high as the March 2023 rate. So … the March 2023 rate set the high-water mark, but people have become better at detecting AI-written messages over the three time periods. If Hoxhunt does another comparative study in 6 months, that data point will be the most interesting one to us. Do AI-generated phishing messages increase in effectiveness against people (e.g., a rate of >2.78%), or do people get better at detecting AI messages (e.g., a rate of <2.78%)? This study tested how threat actors could use AI agents to write better phishing messages, but in parallel, non-threat actors are also using AI to write better emails in general. This should lift the quality of communication for all and sundry. So does the change in both smooth out the differences, making detection more difficult, or does the increased prevalence of using AI to create near-perfect emails throw off signals that AI was involved?

- It would be even more interesting to have done the same study with another cohort – those trained using what Hoxhunt calls “compliance-based security awareness training” programs.

- In describing the methodology, Hoxhunt says “The experiment involved a large set of users (2.5M) selected from Hoxhunt’s platform, which has millions of enterprise users, providing a substantial sample size for the study” and “the AI was instructed to create phishing attacks based on the context of the user” (e.g., role, country). This is why data breaches are such a menace to the current and future phishing landscape: threat actors can aggregate data breach records to create profiles of potential targets and use AI agents to craft profile-specific phishing attacks.

What do you think?